Downloading Subsets of ECCO Datasets#

Andrew Delman, updated 2024-10-08

The ecco_access tutorial went through the steps needed to download ECCO datasets using Python code, using mode = download.

But what if you don’t want to download the entire global domain of ECCO? The NASA Earthdata search interface and the podaac_data_downloader utility both provide lat/lon subsetting, but this can’t be used for the native llc90 grid of ECCO files. However, PO.DAAC does also make its datasets available through OPeNDAP, and this enables spatial subsetting of the ECCO datasets. The ecco_access libraries include the download_subset access mode which exploits OPeNDAP capabilities so they can be invoked easily from your Python script or notebook. Here are some ways this function can be used to subset ECCO files prior to download, along with possible use cases:

- Regional subsetting (e.g., budget analyses that span many time granules but only a single tile or 2 adjacent tiles)

- Depth subsetting (e.g., looking at SST or SSS, or the upper ocean only)

- Data variable subsetting (e.g., downloading one SSH data variable instead of the four that are included in SSH datasets)

- Time subsetting in non-continuous ranges (e.g., downloading boreal summer files from multiple years)

Currently

ecco_accessis a library of modules in the ECCO-v4-Python-Tutorial and ECCO Hackweek ecco-2024 repos. However, we hope to either release it as a standalone package or include it in theecco_v4_pypackage soon. In the meantime you can create a symbolic link to these libraries from the directory of your notebook, e.g.,ln -s ~/ecco-2024/ecco_access {notebook_dir}and then import to your notebook:import ecco_access as ea.

Getting Started#

Before using ecco_access, you need your NASA Earthdata login credentials in your local netrc file–if you don’t yet, follow the steps here.

Let’s look at the syntax of the ecco_podaac_download_subset function:

from ecco_access import ea

help(ea.ecco_podaac_download_subset)

Help on function ecco_podaac_download_subset in module ecco_access.ecco_download:

ecco_podaac_download_subset(ShortName, StartDate=None, EndDate=None, n_workers=4, force_redownload=False, vars_to_include='all', vars_to_omit=None, times_to_include='all', k_isel=[0, 50, 1], tile_isel=[0, 13, 1], j_isel=[0, 90, 1], i_isel=[0, 90, 1], Z_isel=[0, 50, 1], latitude_isel=[0, 360, 1], longitude_isel=[0, 720, 1], netcdf4=True, include_latlon_coords=True, download_or_list='download', list_filename='files_to_download.txt', download_root_dir=None, subset_file_id='')

Downloads subsets of ECCOv4r4 datasets from PO.DAAC using OPeNDAP.

This routine downloads ECCO datasets from PO.DAAC. It is adapted by Andrew Delman from the

ecco_podaac_download routine derived from the Jupyter notebooks created by Jack McNelis and Ian Fenty,

with some code from the OPeNDAP subsetting download script by Toshio Mike Chin and Y. Jiang

(https://github.com/nasa/podaac_tools_and_services/blob/master/subset_opendap/subset_dataset.py).

Parameters

----------

ShortName: str, the ShortName that identifies the dataset on PO.DAAC.

StartDate,EndDate: str, in 'YYYY', 'YYYY-MM', or 'YYYY-MM-DD' format,

define date range [StartDate,EndDate] for download.

EndDate is included in the time range (unlike typical Python ranges).

ECCOv4r4 date range is '1992-01-01' to '2017-12-31'.

For 'SNAPSHOT' datasets, an additional day is added to EndDate to enable closed budgets

within the specified date range.

If StartDate or EndDate are not specified, they are inferred from times_to_include;

if times_to_include is also not specified an error is returned.

n_workers: int, number of workers to use in concurrent downloads.

force_redownload: bool, if True, existing files will be redownloaded and replaced;

if False, existing files will not be replaced.

vars_to_include: list or tuple, names of data variables to include in the downloaded files.

Dimension and coordinate variables are automatically included,

except for the lat/lon coordinate variables when include_latlon_coords=False.

Default is 'all', i.e., to include all data variables in the dataset.

vars_to_omit: list or tuple, names of variables to exclude from the downloaded files.

Default is None, i.e., to include all variables in the dataset.

If both vars_to_include and vars_to_omit are specified,

vars_to_include takes precedence, unless

vars_to_include='all' in which case vars_to_omit takes precedence.

times_to_include: 'all' or list, tuple, or NumPy array.

Indicates the specific days or months to be downloaded, within the StartDate,EndDate

time range specified previously.

If a list/tuple/NumPy array is given, it must consist either of strings of the format

'YYYY', 'YYYY-MM', or 'YYYY-MM-DD', or of NumPy datetime64 objects,

e.g., np.datetime64('YYYY-MM-DD').

This may be useful for downloading specific years,

specific times of the year from multiple years, or specific days of the month.

If a 'YYYY' string or np.datetime64[Y] object is given, all months or days in the given year

will be included.

If a 'YYYY-MM' string or np.datetime64[M] object is given but the ShortName indicates

daily temporal resolution, all of the days in that month will be included.

If a 'YYYY-MM-DD' string or np.datetime64[D] object is given but the ShortName indicates

monthly temporal resolution, the given string/object will be truncated to 'YYYY-MM'.

For 'SNAPSHOT' datasets where a year/month string or np.datetime64 object type is included,

the first of the following month will also be included

(to enable budget closure for the last month).

Default is 'all', which downloads all files within the StartDate,EndDate time range.

k_isel,tile_isel,j_isel,i_isel,

Z_isel,latitude_isel,longitude_isel: 3-element lists, tuples, or NumPy arrays.

Enables spatial subsetting, either in the native grid or lat/lon domain,

by defining the indices to download for each dimension

in the format [start,end,stride] (using Python indexing conventions

where 0 is the first index and end is not included).

Note: only index ranges with consistent spacing can be downloaded

(e.g., downloading tiles 0,1,3,4 would need to be done either with

tile_isel=[0,5,1] or as two separate downloads [0,2,1] and [3,5,1]).

Defaults to the full range of each dimension.

If indices are specified but the dimension does not exist in the files

(e.g., tile_isel is specified but the ShortName is for a lat/lon regridded

dataset), the index specification is ignored.

netcdf4: bool, indicates whether to download files as NetCDF4 or (classic) NetCDF3 files.

include_latlon_coords: bool, indicates whether to include lat/lon coordinate variables in the

native grid downloaded files.

Default is True. For the download of a large number of files (especially daily files),

False is recommended to reduce the size of the download.

Use the grid file instead to obtain the lat/lon coordinate variables.

If downloading the grid file, or if downloading a lat/lon re-mapped data file,

this option is ignored and the coordinates are included regardless.

download_or_list: ('download' or 'list'), indicates whether to download the files,

or output download URLs to a text file to be downloaded later (e.g., using wget or curl).

Default is 'download'.

The options after this apply to either 'list' or 'download',

if not relevant they are ignored.

if download_or_list == 'list':

list_filename: str, path and filename of text file to write download URLs to.

Default is 'urls_to_download.txt' in the current working directory.

If list_filename already exists, output will be appended to existing file.

if download_or_list == 'download':

download_root_dir: str, defines parent directory to download files to.

Files will be downloaded to directory download_root_dir/ShortName/.

If not specified, parent directory defaults to '~/Downloads/ECCO_V4r4_PODAAC/'.

subset_file_id: str, identifier appended to each downloaded file to identify it as a subset.

Default is to not append an identifier.

return_downloaded_files: bool, if True, string or list of downloaded file(s) (including files that were already on disk

and not replaced) is returned.

if False (default), the function returns nothing.

Returns

-------

downloaded_files: str or list, downloaded file(s) with local path that can be passed

directly to xarray (open_dataset or open_mfdataset).

Only returned if download_or_list='download' and return_downloaded_files=True.

There are a lot of options with this function! If you have used the ecco_podaac_download function, you’ll notice the first few options are the same; most importantly, we need to provide a StartDate, EndDate, and ShortName every time the function is called, otherwise it will return an error. The ShortName of each ECCO dataset along with the associated variables and brief descriptions can be found here.

The examples in this tutorial use the

ecco_podaac_download_subsetfunction directly, but the same functionality is provided by usingecco_podaac_accesswith mode =download_subset(inecco_podaac_access,return_granulesis set to True by default).ecco_podaac_to_xrdatasetcan be used to download the subsetted files and open them as an xarray Dataset in a single command.

A few use cases are probably the best way to see what this function can do, so let’s try some.

Example 1: Downloading monthly SSH in the North Atlantic#

Say we want to look at SSH variability in the North Atlantic on the native grid. We could download granules of the full SSH dataset using ecco_podaac_download, e.g., for each of the months in the year 2000:

ea.ecco_podaac_download(ShortName='ECCO_L4_SSH_LLC0090GRID_MONTHLY_V4R4',StartDate='2000-01',EndDate='2000-12')

created download directory C:\Users\adelman\Downloads\ECCO_V4r4_PODAAC\ECCO_L4_SSH_LLC0090GRID_MONTHLY_V4R4

Total number of matching granules: 12

DL Progress: 100%|#########################| 12/12 [00:10<00:00, 1.19it/s]

=====================================

total downloaded: 71.01 Mb

avg download speed: 7.02 Mb/s

Time spent = 10.118713617324829 seconds

Now look at the contents of these SSH files by loading them as a dataset with xarray:

import xarray as xr

from os.path import join,expanduser

ds_SSH_mon_2000 = xr.open_mfdataset(join(expanduser('~'),'Downloads','ECCO_V4r4_PODAAC',\

'ECCO_L4_SSH_LLC0090GRID_MONTHLY_V4R4',\

'*2000*.nc'))

ds_SSH_mon_2000

<xarray.Dataset>

Dimensions: (i: 90, i_g: 90, j: 90, j_g: 90, tile: 13, time: 12, nv: 2, nb: 4)

Coordinates: (12/13)

* i (i) int32 0 1 2 3 4 5 6 7 8 9 ... 80 81 82 83 84 85 86 87 88 89

* i_g (i_g) int32 0 1 2 3 4 5 6 7 8 9 ... 80 81 82 83 84 85 86 87 88 89

* j (j) int32 0 1 2 3 4 5 6 7 8 9 ... 80 81 82 83 84 85 86 87 88 89

* j_g (j_g) int32 0 1 2 3 4 5 6 7 8 9 ... 80 81 82 83 84 85 86 87 88 89

* tile (tile) int32 0 1 2 3 4 5 6 7 8 9 10 11 12

* time (time) datetime64[ns] 2000-01-16T12:00:00 ... 2000-12-16T12:00:00

... ...

YC (tile, j, i) float32 dask.array<chunksize=(13, 90, 90), meta=np.ndarray>

XG (tile, j_g, i_g) float32 dask.array<chunksize=(13, 90, 90), meta=np.ndarray>

YG (tile, j_g, i_g) float32 dask.array<chunksize=(13, 90, 90), meta=np.ndarray>

time_bnds (time, nv) datetime64[ns] dask.array<chunksize=(1, 2), meta=np.ndarray>

XC_bnds (tile, j, i, nb) float32 dask.array<chunksize=(13, 90, 90, 4), meta=np.ndarray>

YC_bnds (tile, j, i, nb) float32 dask.array<chunksize=(13, 90, 90, 4), meta=np.ndarray>

Dimensions without coordinates: nv, nb

Data variables:

SSH (time, tile, j, i) float32 dask.array<chunksize=(1, 13, 90, 90), meta=np.ndarray>

SSHIBC (time, tile, j, i) float32 dask.array<chunksize=(1, 13, 90, 90), meta=np.ndarray>

SSHNOIBC (time, tile, j, i) float32 dask.array<chunksize=(1, 13, 90, 90), meta=np.ndarray>

ETAN (time, tile, j, i) float32 dask.array<chunksize=(1, 13, 90, 90), meta=np.ndarray>

Attributes: (12/57)

acknowledgement: This research was carried out by the Jet Pr...

author: Ian Fenty and Ou Wang

cdm_data_type: Grid

comment: Fields provided on the curvilinear lat-lon-...

Conventions: CF-1.8, ACDD-1.3

coordinates_comment: Note: the global 'coordinates' attribute de...

... ...

time_coverage_duration: P1M

time_coverage_end: 2000-02-01T00:00:00

time_coverage_resolution: P1M

time_coverage_start: 2000-01-01T00:00:00

title: ECCO Sea Surface Height - Monthly Mean llc9...

uuid: a7c2a1c4-400c-11eb-9f79-0cc47a3f49c3- i: 90

- i_g: 90

- j: 90

- j_g: 90

- tile: 13

- time: 12

- nv: 2

- nb: 4

- i(i)int320 1 2 3 4 5 6 ... 84 85 86 87 88 89

- axis :

- X

- long_name :

- grid index in x for variables at tracer and 'v' locations

- swap_dim :

- XC

- comment :

- In the Arakawa C-grid system, tracer (e.g., THETA) and 'v' variables (e.g., VVEL) have the same x coordinate on the model grid.

- coverage_content_type :

- coordinate

array([ 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50, 51, 52, 53, 54, 55, 56, 57, 58, 59, 60, 61, 62, 63, 64, 65, 66, 67, 68, 69, 70, 71, 72, 73, 74, 75, 76, 77, 78, 79, 80, 81, 82, 83, 84, 85, 86, 87, 88, 89]) - i_g(i_g)int320 1 2 3 4 5 6 ... 84 85 86 87 88 89

- axis :

- X

- long_name :

- grid index in x for variables at 'u' and 'g' locations

- c_grid_axis_shift :

- -0.5

- swap_dim :

- XG

- comment :

- In the Arakawa C-grid system, 'u' (e.g., UVEL) and 'g' variables (e.g., XG) have the same x coordinate on the model grid.

- coverage_content_type :

- coordinate

array([ 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50, 51, 52, 53, 54, 55, 56, 57, 58, 59, 60, 61, 62, 63, 64, 65, 66, 67, 68, 69, 70, 71, 72, 73, 74, 75, 76, 77, 78, 79, 80, 81, 82, 83, 84, 85, 86, 87, 88, 89]) - j(j)int320 1 2 3 4 5 6 ... 84 85 86 87 88 89

- axis :

- Y

- long_name :

- grid index in y for variables at tracer and 'u' locations

- swap_dim :

- YC

- comment :

- In the Arakawa C-grid system, tracer (e.g., THETA) and 'u' variables (e.g., UVEL) have the same y coordinate on the model grid.

- coverage_content_type :

- coordinate

array([ 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50, 51, 52, 53, 54, 55, 56, 57, 58, 59, 60, 61, 62, 63, 64, 65, 66, 67, 68, 69, 70, 71, 72, 73, 74, 75, 76, 77, 78, 79, 80, 81, 82, 83, 84, 85, 86, 87, 88, 89]) - j_g(j_g)int320 1 2 3 4 5 6 ... 84 85 86 87 88 89

- axis :

- Y

- long_name :

- grid index in y for variables at 'v' and 'g' locations

- c_grid_axis_shift :

- -0.5

- swap_dim :

- YG

- comment :

- In the Arakawa C-grid system, 'v' (e.g., VVEL) and 'g' variables (e.g., XG) have the same y coordinate.

- coverage_content_type :

- coordinate

array([ 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50, 51, 52, 53, 54, 55, 56, 57, 58, 59, 60, 61, 62, 63, 64, 65, 66, 67, 68, 69, 70, 71, 72, 73, 74, 75, 76, 77, 78, 79, 80, 81, 82, 83, 84, 85, 86, 87, 88, 89]) - tile(tile)int320 1 2 3 4 5 6 7 8 9 10 11 12

- long_name :

- lat-lon-cap tile index

- comment :

- The ECCO V4 horizontal model grid is divided into 13 tiles of 90x90 cells for convenience.

- coverage_content_type :

- coordinate

array([ 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12])

- time(time)datetime64[ns]2000-01-16T12:00:00 ... 2000-12-...

- long_name :

- center time of averaging period

- axis :

- T

- bounds :

- time_bnds

- coverage_content_type :

- coordinate

- standard_name :

- time

array(['2000-01-16T12:00:00.000000000', '2000-02-15T12:00:00.000000000', '2000-03-16T12:00:00.000000000', '2000-04-16T00:00:00.000000000', '2000-05-16T12:00:00.000000000', '2000-06-16T00:00:00.000000000', '2000-07-16T12:00:00.000000000', '2000-08-16T12:00:00.000000000', '2000-09-16T00:00:00.000000000', '2000-10-16T12:00:00.000000000', '2000-11-16T00:00:00.000000000', '2000-12-16T12:00:00.000000000'], dtype='datetime64[ns]') - XC(tile, j, i)float32dask.array<chunksize=(13, 90, 90), meta=np.ndarray>

- long_name :

- longitude of tracer grid cell center

- units :

- degrees_east

- coordinate :

- YC XC

- bounds :

- XC_bnds

- comment :

- nonuniform grid spacing

- coverage_content_type :

- coordinate

- standard_name :

- longitude

Array Chunk Bytes 411.33 kiB 411.33 kiB Shape (13, 90, 90) (13, 90, 90) Count 55 Tasks 1 Chunks Type float32 numpy.ndarray - YC(tile, j, i)float32dask.array<chunksize=(13, 90, 90), meta=np.ndarray>

- long_name :

- latitude of tracer grid cell center

- units :

- degrees_north

- coordinate :

- YC XC

- bounds :

- YC_bnds

- comment :

- nonuniform grid spacing

- coverage_content_type :

- coordinate

- standard_name :

- latitude

Array Chunk Bytes 411.33 kiB 411.33 kiB Shape (13, 90, 90) (13, 90, 90) Count 55 Tasks 1 Chunks Type float32 numpy.ndarray - XG(tile, j_g, i_g)float32dask.array<chunksize=(13, 90, 90), meta=np.ndarray>

- long_name :

- longitude of 'southwest' corner of tracer grid cell

- units :

- degrees_east

- coordinate :

- YG XG

- comment :

- Nonuniform grid spacing. Note: 'southwest' does not correspond to geographic orientation but is used for convenience to describe the computational grid. See MITgcm dcoumentation for details.

- coverage_content_type :

- coordinate

- standard_name :

- longitude

Array Chunk Bytes 411.33 kiB 411.33 kiB Shape (13, 90, 90) (13, 90, 90) Count 55 Tasks 1 Chunks Type float32 numpy.ndarray - YG(tile, j_g, i_g)float32dask.array<chunksize=(13, 90, 90), meta=np.ndarray>

- long_name :

- latitude of 'southwest' corner of tracer grid cell

- units :

- degrees_north

- coordinate :

- YG XG

- comment :

- Nonuniform grid spacing. Note: 'southwest' does not correspond to geographic orientation but is used for convenience to describe the computational grid. See MITgcm dcoumentation for details.

- coverage_content_type :

- coordinate

- standard_name :

- latitude

Array Chunk Bytes 411.33 kiB 411.33 kiB Shape (13, 90, 90) (13, 90, 90) Count 55 Tasks 1 Chunks Type float32 numpy.ndarray - time_bnds(time, nv)datetime64[ns]dask.array<chunksize=(1, 2), meta=np.ndarray>

- comment :

- Start and end times of averaging period.

- coverage_content_type :

- coordinate

- long_name :

- time bounds of averaging period

Array Chunk Bytes 192 B 16 B Shape (12, 2) (1, 2) Count 36 Tasks 12 Chunks Type datetime64[ns] numpy.ndarray - XC_bnds(tile, j, i, nb)float32dask.array<chunksize=(13, 90, 90, 4), meta=np.ndarray>

- comment :

- Bounds array follows CF conventions. XC_bnds[i,j,0] = 'southwest' corner (j-1, i-1), XC_bnds[i,j,1] = 'southeast' corner (j-1, i+1), XC_bnds[i,j,2] = 'northeast' corner (j+1, i+1), XC_bnds[i,j,3] = 'northwest' corner (j+1, i-1). Note: 'southwest', 'southeast', northwest', and 'northeast' do not correspond to geographic orientation but are used for convenience to describe the computational grid. See MITgcm dcoumentation for details.

- coverage_content_type :

- coordinate

- long_name :

- longitudes of tracer grid cell corners

Array Chunk Bytes 1.61 MiB 1.61 MiB Shape (13, 90, 90, 4) (13, 90, 90, 4) Count 55 Tasks 1 Chunks Type float32 numpy.ndarray - YC_bnds(tile, j, i, nb)float32dask.array<chunksize=(13, 90, 90, 4), meta=np.ndarray>

- comment :

- Bounds array follows CF conventions. YC_bnds[i,j,0] = 'southwest' corner (j-1, i-1), YC_bnds[i,j,1] = 'southeast' corner (j-1, i+1), YC_bnds[i,j,2] = 'northeast' corner (j+1, i+1), YC_bnds[i,j,3] = 'northwest' corner (j+1, i-1). Note: 'southwest', 'southeast', northwest', and 'northeast' do not correspond to geographic orientation but are used for convenience to describe the computational grid. See MITgcm dcoumentation for details.

- coverage_content_type :

- coordinate

- long_name :

- latitudes of tracer grid cell corners

Array Chunk Bytes 1.61 MiB 1.61 MiB Shape (13, 90, 90, 4) (13, 90, 90, 4) Count 55 Tasks 1 Chunks Type float32 numpy.ndarray

- SSH(time, tile, j, i)float32dask.array<chunksize=(1, 13, 90, 90), meta=np.ndarray>

- long_name :

- Dynamic sea surface height anomaly

- units :

- m

- coverage_content_type :

- modelResult

- standard_name :

- sea_surface_height_above_geoid

- comment :

- Dynamic sea surface height anomaly above the geoid, suitable for comparisons with altimetry sea surface height data products that apply the inverse barometer (IB) correction. Note: SSH is calculated by correcting model sea level anomaly ETAN for three effects: a) global mean steric sea level changes related to density changes in the Boussinesq volume-conserving model (Greatbatch correction, see sterGloH), b) the inverted barometer (IB) effect (see SSHIBC) and c) sea level displacement due to sea-ice and snow pressure loading (see sIceLoad). SSH can be compared with the similarly-named SSH variable in previous ECCO products that did not include atmospheric pressure loading (e.g., Version 4 Release 3). Use SSHNOIBC for comparisons with altimetry data products that do NOT apply the IB correction.

- valid_min :

- -1.8805772066116333

- valid_max :

- 1.4207719564437866

Array Chunk Bytes 4.82 MiB 411.33 kiB Shape (12, 13, 90, 90) (1, 13, 90, 90) Count 36 Tasks 12 Chunks Type float32 numpy.ndarray - SSHIBC(time, tile, j, i)float32dask.array<chunksize=(1, 13, 90, 90), meta=np.ndarray>

- long_name :

- The inverted barometer (IB) correction to sea surface height due to atmospheric pressure loading

- units :

- m

- coverage_content_type :

- modelResult

- comment :

- Not an SSH itself, but a correction to model sea level anomaly (ETAN) required to account for the static part of sea surface displacement by atmosphere pressure loading: SSH = SSHNOIBC - SSHIBC. Note: Use SSH for model-data comparisons with altimetry data products that DO apply the IB correction and SSHNOIBC for comparisons with altimetry data products that do NOT apply the IB correction.

- valid_min :

- -0.30144819617271423

- valid_max :

- 0.5248842239379883

Array Chunk Bytes 4.82 MiB 411.33 kiB Shape (12, 13, 90, 90) (1, 13, 90, 90) Count 36 Tasks 12 Chunks Type float32 numpy.ndarray - SSHNOIBC(time, tile, j, i)float32dask.array<chunksize=(1, 13, 90, 90), meta=np.ndarray>

- long_name :

- Sea surface height anomaly without the inverted barometer (IB) correction

- units :

- m

- coverage_content_type :

- modelResult

- comment :

- Sea surface height anomaly above the geoid without the inverse barometer (IB) correction, suitable for comparisons with altimetry sea surface height data products that do NOT apply the inverse barometer (IB) correction. Note: SSHNOIBC is calculated by correcting model sea level anomaly ETAN for two effects: a) global mean steric sea level changes related to density changes in the Boussinesq volume-conserving model (Greatbatch correction, see sterGloH), b) sea level displacement due to sea-ice and snow pressure loading (see sIceLoad). In ECCO Version 4 Release 4 the model is forced with atmospheric pressure loading. SSHNOIBC does not correct for the static part of the effect of atmosphere pressure loading on sea surface height (the so-called inverse barometer (IB) correction). Use SSH for comparisons with altimetry data products that DO apply the IB correction.

- valid_min :

- -1.6654272079467773

- valid_max :

- 1.4550364017486572

Array Chunk Bytes 4.82 MiB 411.33 kiB Shape (12, 13, 90, 90) (1, 13, 90, 90) Count 36 Tasks 12 Chunks Type float32 numpy.ndarray - ETAN(time, tile, j, i)float32dask.array<chunksize=(1, 13, 90, 90), meta=np.ndarray>

- long_name :

- Model sea level anomaly

- units :

- m

- coverage_content_type :

- modelResult

- comment :

- Model sea level anomaly WITHOUT corrections for global mean density (steric) changes, inverted barometer effect, or volume displacement due to submerged sea-ice and snow . Note: ETAN should NOT be used for comparisons with altimetry data products because ETAN is NOT corrected for (a) global mean steric sea level changes related to density changes in the Boussinesq volume-conserving model (Greatbatch correction, see sterGloH) nor (b) sea level displacement due to submerged sea-ice and snow (see sIceLoad). These corrections ARE made for the variables SSH and SSHNOIBC.

- valid_min :

- -8.304216384887695

- valid_max :

- 1.460192084312439

Array Chunk Bytes 4.82 MiB 411.33 kiB Shape (12, 13, 90, 90) (1, 13, 90, 90) Count 36 Tasks 12 Chunks Type float32 numpy.ndarray

- acknowledgement :

- This research was carried out by the Jet Propulsion Laboratory, managed by the California Institute of Technology under a contract with the National Aeronautics and Space Administration.

- author :

- Ian Fenty and Ou Wang

- cdm_data_type :

- Grid

- comment :

- Fields provided on the curvilinear lat-lon-cap 90 (llc90) native grid used in the ECCO model. SSH (dynamic sea surface height) = SSHNOIBC (dynamic sea surface without the inverse barometer correction) - SSHIBC (inverse barometer correction). The inverted barometer correction accounts for variations in sea surface height due to atmospheric pressure variations. Note: ETAN is model sea level anomaly and should not be compared with satellite altimetery products, see SSH and ETAN for more details.

- Conventions :

- CF-1.8, ACDD-1.3

- coordinates_comment :

- Note: the global 'coordinates' attribute describes auxillary coordinates.

- creator_email :

- ecco-group@mit.edu

- creator_institution :

- NASA Jet Propulsion Laboratory (JPL)

- creator_name :

- ECCO Consortium

- creator_type :

- group

- creator_url :

- https://ecco-group.org

- date_created :

- 2020-12-16T18:07:46

- date_issued :

- 2020-12-16T18:07:46

- date_metadata_modified :

- 2021-03-15T21:54:47

- date_modified :

- 2021-03-15T21:54:47

- geospatial_bounds_crs :

- EPSG:4326

- geospatial_lat_max :

- 90.0

- geospatial_lat_min :

- -90.0

- geospatial_lat_resolution :

- variable

- geospatial_lat_units :

- degrees_north

- geospatial_lon_max :

- 180.0

- geospatial_lon_min :

- -180.0

- geospatial_lon_resolution :

- variable

- geospatial_lon_units :

- degrees_east

- history :

- Inaugural release of an ECCO Central Estimate solution to PO.DAAC

- id :

- 10.5067/ECL5M-SSH44

- institution :

- NASA Jet Propulsion Laboratory (JPL)

- instrument_vocabulary :

- GCMD instrument keywords

- keywords :

- EARTH SCIENCE > OCEANS > SEA SURFACE TOPOGRAPHY > SEA SURFACE HEIGHT, EARTH SCIENCE SERVICES > MODELS > EARTH SCIENCE REANALYSES/ASSIMILATION MODELS

- keywords_vocabulary :

- NASA Global Change Master Directory (GCMD) Science Keywords

- license :

- Public Domain

- metadata_link :

- https://cmr.earthdata.nasa.gov/search/collections.umm_json?ShortName=ECCO_L4_SSH_LLC0090GRID_MONTHLY_V4R4

- naming_authority :

- gov.nasa.jpl

- platform :

- ERS-1/2, TOPEX/Poseidon, Geosat Follow-On (GFO), ENVISAT, Jason-1, Jason-2, CryoSat-2, SARAL/AltiKa, Jason-3, AVHRR, Aquarius, SSM/I, SSMIS, GRACE, DTU17MDT, Argo, WOCE, GO-SHIP, MEOP, Ice Tethered Profilers (ITP)

- platform_vocabulary :

- GCMD platform keywords

- processing_level :

- L4

- product_name :

- SEA_SURFACE_HEIGHT_mon_mean_2000-01_ECCO_V4r4_native_llc0090.nc

- product_time_coverage_end :

- 2018-01-01T00:00:00

- product_time_coverage_start :

- 1992-01-01T12:00:00

- product_version :

- Version 4, Release 4

- program :

- NASA Physical Oceanography, Cryosphere, Modeling, Analysis, and Prediction (MAP)

- project :

- Estimating the Circulation and Climate of the Ocean (ECCO)

- publisher_email :

- podaac@podaac.jpl.nasa.gov

- publisher_institution :

- PO.DAAC

- publisher_name :

- Physical Oceanography Distributed Active Archive Center (PO.DAAC)

- publisher_type :

- institution

- publisher_url :

- https://podaac.jpl.nasa.gov

- references :

- ECCO Consortium, Fukumori, I., Wang, O., Fenty, I., Forget, G., Heimbach, P., & Ponte, R. M. 2020. Synopsis of the ECCO Central Production Global Ocean and Sea-Ice State Estimate (Version 4 Release 4). doi:10.5281/zenodo.3765928

- source :

- The ECCO V4r4 state estimate was produced by fitting a free-running solution of the MITgcm (checkpoint 66g) to satellite and in situ observational data in a least squares sense using the adjoint method

- standard_name_vocabulary :

- NetCDF Climate and Forecast (CF) Metadata Convention

- summary :

- This dataset provides monthly-averaged dynamic sea surface height and model sea level anomaly on the lat-lon-cap 90 (llc90) native model grid from the ECCO Version 4 Release 4 (V4r4) ocean and sea-ice state estimate. Estimating the Circulation and Climate of the Ocean (ECCO) state estimates are dynamically and kinematically-consistent reconstructions of the three-dimensional, time-evolving ocean, sea-ice, and surface atmospheric states. ECCO V4r4 is a free-running solution of a global, nominally 1-degree configuration of the MIT general circulation model (MITgcm) that has been fit to observations in a least-squares sense. Observational data constraints used in V4r4 include sea surface height (SSH) from satellite altimeters [ERS-1/2, TOPEX/Poseidon, GFO, ENVISAT, Jason-1,2,3, CryoSat-2, and SARAL/AltiKa]; sea surface temperature (SST) from satellite radiometers [AVHRR], sea surface salinity (SSS) from the Aquarius satellite radiometer/scatterometer, ocean bottom pressure (OBP) from the GRACE satellite gravimeter; sea-ice concentration from satellite radiometers [SSM/I and SSMIS], and in-situ ocean temperature and salinity measured with conductivity-temperature-depth (CTD) sensors and expendable bathythermographs (XBTs) from several programs [e.g., WOCE, GO-SHIP, Argo, and others] and platforms [e.g., research vessels, gliders, moorings, ice-tethered profilers, and instrumented pinnipeds]. V4r4 covers the period 1992-01-01T12:00:00 to 2018-01-01T00:00:00.

- time_coverage_duration :

- P1M

- time_coverage_end :

- 2000-02-01T00:00:00

- time_coverage_resolution :

- P1M

- time_coverage_start :

- 2000-01-01T00:00:00

- title :

- ECCO Sea Surface Height - Monthly Mean llc90 Grid (Version 4 Release 4)

- uuid :

- a7c2a1c4-400c-11eb-9f79-0cc47a3f49c3

Note that there are four data variables in these files, but perhaps we only need one, the “dynamic sea surface height anomaly” (SSH). The function ecco_podaac_download_subset can be used to download only that data variable (along with the dimension and coordinate information).

Furthermore, we only need to look at one region, the North Atlantic. So most likely we don’t need the entire 13-tile global domain of ECCO–but what tiles do we need? Let’s use a simple function to find out. Note: you need the ECCO native grid file downloaded for the script below; if you don’t have it downloaded yet, use the code commented out at the top.

# # Download ECCO native grid file

# ea.ecco_podaac_download(ShortName='ECCO_L4_GEOMETRY_LLC0090GRID_V4R4',StartDate='1992-01-01',EndDate='2017-12-31')

import numpy as np

import xarray as xr

from os.path import join,expanduser

# assumes grid file is in directory ~/Downloads/ECCO_V4r4_PODAAC/ECCO_L4_GEOMETRY_LLC0090GRID_V4R4/

# change if your grid file location is different

grid_file_path = join(expanduser('~'),'Downloads','ECCO_V4r4_PODAAC',\

'ECCO_L4_GEOMETRY_LLC0090GRID_V4R4',\

'GRID_GEOMETRY_ECCO_V4r4_native_llc0090.nc')

ds_grid = xr.open_dataset(grid_file_path)

# find llc90 tiles in given bounding box

def llc90_tiles_find(ds_grid,latsouth,latnorth,longwest,longeast):

lat_llc90 = ds_grid.YC.values

lon_llc90 = ds_grid.XC.values

cells_in_box = np.logical_and(np.logical_and(lat_llc90 >= latsouth,lat_llc90 <= latnorth),\

((lon_llc90 - longwest - 1.e-5) % 360) <= (longeast - longwest - 1.e-5) % 360)

cells_in_box_tile_ind = cells_in_box.nonzero()[0]

tiles_in_box = np.unique(cells_in_box_tile_ind)

return tiles_in_box

# find tiles in North Atlantic

longwest = -80

longeast = 10

latsouth = 20

latnorth = 60

tiles_NAtl = llc90_tiles_find(ds_grid,latsouth,latnorth,longwest,longeast)

print('North Atlantic tiles: '+str(tiles_NAtl))

North Atlantic tiles: [ 2 10]

Seeing that the identified region is contained in tiles 2 and 10, we only need to download those two tiles. Let’s repeat the SSH download above using ecco_podaac_download_subset to select for the data variable SSH and the tiles 2 and 10.

Because of OPeNDAP syntax we need to express the selected tiles as a range [2,13,8], with a “start” of 2 and a “stride” of 8; the “end” can be any integer greater than 10, but no larger than 18.

ea.ecco_podaac_download_subset(ShortName='ECCO_L4_SSH_LLC0090GRID_MONTHLY_V4R4',\

StartDate='2000-01',EndDate='2000-12',\

vars_to_include=['SSH'],\

tile_isel=[2,13,8],\

subset_file_id='SSHonly_NAtl')

Download to directory C:\Users\adelman\Downloads\ECCO_V4r4_PODAAC\ECCO_L4_SSH_LLC0090GRID_MONTHLY_V4R4

Please wait while program searches for the granules ...

Total number of matching granules: 12

DL Progress: 100%|#########################| 12/12 [00:40<00:00, 3.37s/it]

=====================================

total downloaded: 3.21 Mb

avg download speed: 0.08 Mb/s

Time spent = 40.509522676467896 seconds

Note that the total download size of the subsetted files is much smaller (3 Mb instead of 71 Mb), but the download takes longer because of the subsetting that OPeNDAP does prior to the download. The subset_file_id is appended to the names of the downloaded files to distinguish them from non-subsetted files or other subsets (the default is to have no identifier).

Let’s look at the contents of the subsetted files in a xarray dataset:

ds_SSH_mon_2000_sub = xr.open_mfdataset(join(expanduser('~'),'Downloads','ECCO_V4r4_PODAAC',\

'ECCO_L4_SSH_LLC0090GRID_MONTHLY_V4R4',\

'*2000*SSHonly_NAtl.nc'))

ds_SSH_mon_2000_sub

<xarray.Dataset>

Dimensions: (time: 12, tile: 2, j: 90, i: 90, j_g: 90, i_g: 90, nb: 4, nv: 2)

Coordinates: (12/15)

XG (tile, j_g, i_g) float32 dask.array<chunksize=(2, 90, 90), meta=np.ndarray>

YC (tile, j, i) float32 dask.array<chunksize=(2, 90, 90), meta=np.ndarray>

XC (tile, j, i) float32 dask.array<chunksize=(2, 90, 90), meta=np.ndarray>

YG (tile, j_g, i_g) float32 dask.array<chunksize=(2, 90, 90), meta=np.ndarray>

XC_bnds (tile, j, i, nb) float32 dask.array<chunksize=(2, 90, 90, 4), meta=np.ndarray>

YC_bnds (tile, j, i, nb) float32 dask.array<chunksize=(2, 90, 90, 4), meta=np.ndarray>

... ...

* j (j) int32 0 1 2 3 4 5 6 7 8 9 ... 80 81 82 83 84 85 86 87 88 89

* j_g (j_g) int32 0 1 2 3 4 5 6 7 8 9 ... 80 81 82 83 84 85 86 87 88 89

* nb (nb) float32 0.0 1.0 2.0 3.0

* nv (nv) float32 0.0 1.0

* tile (tile) int32 2 10

* time (time) datetime64[ns] 2000-01-16T12:00:00 ... 2000-12-16T12:00:00

Data variables:

SSH (time, tile, j, i) float32 dask.array<chunksize=(1, 2, 90, 90), meta=np.ndarray>

Attributes: (12/58)

acknowledgement: This research was carried out by the Jet Pr...

author: Ian Fenty and Ou Wang

cdm_data_type: Grid

comment: Fields provided on the curvilinear lat-lon-...

Conventions: CF-1.8, ACDD-1.3

coordinates_comment: Note: the global 'coordinates' attribute de...

... ...

time_coverage_end: 2000-02-01T00:00:00

time_coverage_resolution: P1M

time_coverage_start: 2000-01-01T00:00:00

title: ECCO Sea Surface Height - Monthly Mean llc9...

uuid: a7c2a1c4-400c-11eb-9f79-0cc47a3f49c3

history_json: [{"$schema":"https:\/\/harmony.earthdata.na...- time: 12

- tile: 2

- j: 90

- i: 90

- j_g: 90

- i_g: 90

- nb: 4

- nv: 2

- XG(tile, j_g, i_g)float32dask.array<chunksize=(2, 90, 90), meta=np.ndarray>

- long_name :

- longitude of 'southwest' corner of tracer grid cell

- units :

- degrees_east

- coordinate :

- YG XG

- comment :

- Nonuniform grid spacing. Note: 'southwest' does not correspond to geographic orientation but is used for convenience to describe the computational grid. See MITgcm dcoumentation for details.

- coverage_content_type :

- coordinate

- standard_name :

- longitude

- origname :

- XG

- fullnamepath :

- /XG

Array Chunk Bytes 63.28 kiB 63.28 kiB Shape (2, 90, 90) (2, 90, 90) Count 55 Tasks 1 Chunks Type float32 numpy.ndarray - YC(tile, j, i)float32dask.array<chunksize=(2, 90, 90), meta=np.ndarray>

- long_name :

- latitude of tracer grid cell center

- units :

- degrees_north

- coordinate :

- YC XC

- bounds :

- YC_bnds

- comment :

- nonuniform grid spacing

- coverage_content_type :

- coordinate

- standard_name :

- latitude

- origname :

- YC

- fullnamepath :

- /YC

Array Chunk Bytes 63.28 kiB 63.28 kiB Shape (2, 90, 90) (2, 90, 90) Count 55 Tasks 1 Chunks Type float32 numpy.ndarray - XC(tile, j, i)float32dask.array<chunksize=(2, 90, 90), meta=np.ndarray>

- long_name :

- longitude of tracer grid cell center

- units :

- degrees_east

- coordinate :

- YC XC

- bounds :

- XC_bnds

- comment :

- nonuniform grid spacing

- coverage_content_type :

- coordinate

- standard_name :

- longitude

- origname :

- XC

- fullnamepath :

- /XC

Array Chunk Bytes 63.28 kiB 63.28 kiB Shape (2, 90, 90) (2, 90, 90) Count 55 Tasks 1 Chunks Type float32 numpy.ndarray - YG(tile, j_g, i_g)float32dask.array<chunksize=(2, 90, 90), meta=np.ndarray>

- long_name :

- latitude of 'southwest' corner of tracer grid cell

- units :

- degrees_north

- coordinate :

- YG XG

- comment :

- Nonuniform grid spacing. Note: 'southwest' does not correspond to geographic orientation but is used for convenience to describe the computational grid. See MITgcm dcoumentation for details.

- coverage_content_type :

- coordinate

- standard_name :

- latitude

- origname :

- YG

- fullnamepath :

- /YG

Array Chunk Bytes 63.28 kiB 63.28 kiB Shape (2, 90, 90) (2, 90, 90) Count 55 Tasks 1 Chunks Type float32 numpy.ndarray - XC_bnds(tile, j, i, nb)float32dask.array<chunksize=(2, 90, 90, 4), meta=np.ndarray>

- comment :

- Bounds array follows CF conventions. XC_bnds[i,j,0] = 'southwest' corner (j-1, i-1), XC_bnds[i,j,1] = 'southeast' corner (j-1, i+1), XC_bnds[i,j,2] = 'northeast' corner (j+1, i+1), XC_bnds[i,j,3] = 'northwest' corner (j+1, i-1). Note: 'southwest', 'southeast', northwest', and 'northeast' do not correspond to geographic orientation but are used for convenience to describe the computational grid. See MITgcm dcoumentation for details.

- coverage_content_type :

- coordinate

- long_name :

- longitudes of tracer grid cell corners

- origname :

- XC_bnds

- fullnamepath :

- /XC_bnds

Array Chunk Bytes 253.12 kiB 253.12 kiB Shape (2, 90, 90, 4) (2, 90, 90, 4) Count 55 Tasks 1 Chunks Type float32 numpy.ndarray - YC_bnds(tile, j, i, nb)float32dask.array<chunksize=(2, 90, 90, 4), meta=np.ndarray>

- comment :

- Bounds array follows CF conventions. YC_bnds[i,j,0] = 'southwest' corner (j-1, i-1), YC_bnds[i,j,1] = 'southeast' corner (j-1, i+1), YC_bnds[i,j,2] = 'northeast' corner (j+1, i+1), YC_bnds[i,j,3] = 'northwest' corner (j+1, i-1). Note: 'southwest', 'southeast', northwest', and 'northeast' do not correspond to geographic orientation but are used for convenience to describe the computational grid. See MITgcm dcoumentation for details.

- coverage_content_type :

- coordinate

- long_name :

- latitudes of tracer grid cell corners

- origname :

- YC_bnds

- fullnamepath :

- /YC_bnds

Array Chunk Bytes 253.12 kiB 253.12 kiB Shape (2, 90, 90, 4) (2, 90, 90, 4) Count 55 Tasks 1 Chunks Type float32 numpy.ndarray - time_bnds(time, nv)datetime64[ns]dask.array<chunksize=(1, 2), meta=np.ndarray>

- comment :

- Start and end times of averaging period.

- coverage_content_type :

- coordinate

- long_name :

- time bounds of averaging period

- origname :

- time_bnds

- fullnamepath :

- /time_bnds

Array Chunk Bytes 192 B 16 B Shape (12, 2) (1, 2) Count 36 Tasks 12 Chunks Type datetime64[ns] numpy.ndarray - i(i)int320 1 2 3 4 5 6 ... 84 85 86 87 88 89

- axis :

- X

- long_name :

- grid index in x for variables at tracer and 'v' locations

- swap_dim :

- XC

- comment :

- In the Arakawa C-grid system, tracer (e.g., THETA) and 'v' variables (e.g., VVEL) have the same x coordinate on the model grid.

- coverage_content_type :

- coordinate

- origname :

- i

- fullnamepath :

- /i

array([ 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50, 51, 52, 53, 54, 55, 56, 57, 58, 59, 60, 61, 62, 63, 64, 65, 66, 67, 68, 69, 70, 71, 72, 73, 74, 75, 76, 77, 78, 79, 80, 81, 82, 83, 84, 85, 86, 87, 88, 89]) - i_g(i_g)int320 1 2 3 4 5 6 ... 84 85 86 87 88 89

- axis :

- X

- long_name :

- grid index in x for variables at 'u' and 'g' locations

- c_grid_axis_shift :

- -0.5

- swap_dim :

- XG

- comment :

- In the Arakawa C-grid system, 'u' (e.g., UVEL) and 'g' variables (e.g., XG) have the same x coordinate on the model grid.

- coverage_content_type :

- coordinate

- origname :

- i_g

- fullnamepath :

- /i_g

array([ 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50, 51, 52, 53, 54, 55, 56, 57, 58, 59, 60, 61, 62, 63, 64, 65, 66, 67, 68, 69, 70, 71, 72, 73, 74, 75, 76, 77, 78, 79, 80, 81, 82, 83, 84, 85, 86, 87, 88, 89]) - j(j)int320 1 2 3 4 5 6 ... 84 85 86 87 88 89

- axis :

- Y

- long_name :

- grid index in y for variables at tracer and 'u' locations

- swap_dim :

- YC

- comment :

- In the Arakawa C-grid system, tracer (e.g., THETA) and 'u' variables (e.g., UVEL) have the same y coordinate on the model grid.

- coverage_content_type :

- coordinate

- origname :

- j

- fullnamepath :

- /j

array([ 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50, 51, 52, 53, 54, 55, 56, 57, 58, 59, 60, 61, 62, 63, 64, 65, 66, 67, 68, 69, 70, 71, 72, 73, 74, 75, 76, 77, 78, 79, 80, 81, 82, 83, 84, 85, 86, 87, 88, 89]) - j_g(j_g)int320 1 2 3 4 5 6 ... 84 85 86 87 88 89

- axis :

- Y

- long_name :

- grid index in y for variables at 'v' and 'g' locations

- c_grid_axis_shift :

- -0.5

- swap_dim :

- YG

- comment :

- In the Arakawa C-grid system, 'v' (e.g., VVEL) and 'g' variables (e.g., XG) have the same y coordinate.

- coverage_content_type :

- coordinate

- origname :

- j_g

- fullnamepath :

- /j_g

array([ 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50, 51, 52, 53, 54, 55, 56, 57, 58, 59, 60, 61, 62, 63, 64, 65, 66, 67, 68, 69, 70, 71, 72, 73, 74, 75, 76, 77, 78, 79, 80, 81, 82, 83, 84, 85, 86, 87, 88, 89]) - nb(nb)float320.0 1.0 2.0 3.0

array([0., 1., 2., 3.], dtype=float32)

- nv(nv)float320.0 1.0

array([0., 1.], dtype=float32)

- tile(tile)int322 10

- long_name :

- lat-lon-cap tile index

- comment :

- The ECCO V4 horizontal model grid is divided into 13 tiles of 90x90 cells for convenience.

- coverage_content_type :

- coordinate

- origname :

- tile

- fullnamepath :

- /tile

array([ 2, 10])

- time(time)datetime64[ns]2000-01-16T12:00:00 ... 2000-12-...

- long_name :

- center time of averaging period

- axis :

- T

- bounds :

- time_bnds

- coverage_content_type :

- coordinate

- standard_name :

- time

- origname :

- time

- fullnamepath :

- /time

array(['2000-01-16T12:00:00.000000000', '2000-02-15T12:00:00.000000000', '2000-03-16T12:00:00.000000000', '2000-04-16T00:00:00.000000000', '2000-05-16T12:00:00.000000000', '2000-06-16T00:00:00.000000000', '2000-07-16T12:00:00.000000000', '2000-08-16T12:00:00.000000000', '2000-09-16T00:00:00.000000000', '2000-10-16T12:00:00.000000000', '2000-11-16T00:00:00.000000000', '2000-12-16T12:00:00.000000000'], dtype='datetime64[ns]')

- SSH(time, tile, j, i)float32dask.array<chunksize=(1, 2, 90, 90), meta=np.ndarray>

- long_name :

- Dynamic sea surface height anomaly

- units :

- m

- coverage_content_type :

- modelResult

- standard_name :

- sea_surface_height_above_geoid

- comment :

- Dynamic sea surface height anomaly above the geoid, suitable for comparisons with altimetry sea surface height data products that apply the inverse barometer (IB) correction. Note: SSH is calculated by correcting model sea level anomaly ETAN for three effects: a) global mean steric sea level changes related to density changes in the Boussinesq volume-conserving model (Greatbatch correction, see sterGloH), b) the inverted barometer (IB) effect (see SSHIBC) and c) sea level displacement due to sea-ice and snow pressure loading (see sIceLoad). SSH can be compared with the similarly-named SSH variable in previous ECCO products that did not include atmospheric pressure loading (e.g., Version 4 Release 3). Use SSHNOIBC for comparisons with altimetry data products that do NOT apply the IB correction.

- valid_min :

- -1.8805772066116333

- valid_max :

- 1.4207719564437866

- origname :

- SSH

- fullnamepath :

- /SSH

Array Chunk Bytes 759.38 kiB 63.28 kiB Shape (12, 2, 90, 90) (1, 2, 90, 90) Count 36 Tasks 12 Chunks Type float32 numpy.ndarray

- acknowledgement :

- This research was carried out by the Jet Propulsion Laboratory, managed by the California Institute of Technology under a contract with the National Aeronautics and Space Administration.

- author :

- Ian Fenty and Ou Wang

- cdm_data_type :

- Grid

- comment :

- Fields provided on the curvilinear lat-lon-cap 90 (llc90) native grid used in the ECCO model. SSH (dynamic sea surface height) = SSHNOIBC (dynamic sea surface without the inverse barometer correction) - SSHIBC (inverse barometer correction). The inverted barometer correction accounts for variations in sea surface height due to atmospheric pressure variations. Note: ETAN is model sea level anomaly and should not be compared with satellite altimetery products, see SSH and ETAN for more details.

- Conventions :

- CF-1.8, ACDD-1.3

- coordinates_comment :

- Note: the global 'coordinates' attribute describes auxillary coordinates.

- creator_email :

- ecco-group@mit.edu

- creator_institution :

- NASA Jet Propulsion Laboratory (JPL)

- creator_name :

- ECCO Consortium

- creator_type :

- group

- creator_url :

- https://ecco-group.org

- date_created :

- 2020-12-16T18:07:46

- date_issued :

- 2020-12-16T18:07:46

- date_metadata_modified :

- 2021-03-15T21:54:47

- date_modified :

- 2021-03-15T21:54:47

- geospatial_bounds_crs :

- EPSG:4326

- geospatial_lat_max :

- 90.0

- geospatial_lat_min :

- -90.0

- geospatial_lat_resolution :

- variable

- geospatial_lat_units :

- degrees_north

- geospatial_lon_max :

- 180.0

- geospatial_lon_min :

- -180.0

- geospatial_lon_resolution :

- variable

- geospatial_lon_units :

- degrees_east

- history :

- Inaugural release of an ECCO Central Estimate solution to PO.DAAC 2023-12-22 23:04:42 GMT hyrax-1.16.8-356 https://opendap.earthdata.nasa.gov/providers/POCLOUD/collections/ECCO%2520Sea%2520Surface%2520Height%2520-%2520Monthly%2520Mean%2520llc90%2520Grid%2520(Version%25204%2520Release%25204)/granules/SEA_SURFACE_HEIGHT_mon_mean_2000-01_ECCO_V4r4_native_llc0090.dap.nc4?dap4.ce=/SSH%5B0:1:0%5D%5B2:8:10%5D%5B0:1:89%5D%5B0:1:89%5D;/XG%5B2:8:10%5D%5B0:1:89%5D%5B0:1:89%5D;/YC%5B2:8:10%5D%5B0:1:89%5D%5B0:1:89%5D;/XC%5B2:8:10%5D%5B0:1:89%5D%5B0:1:89%5D;/YG%5B2:8:10%5D%5B0:1:89%5D%5B0:1:89%5D;/XC_bnds%5B2:8:10%5D%5B0:1:89%5D%5B0:1:89%5D%5B0:1:3%5D;/YC_bnds%5B2:8:10%5D%5B0:1:89%5D%5B0:1:89%5D%5B0:1:3%5D;/time_bnds%5B0:1:0%5D%5B0:1:1%5D;/i%5B0:1:89%5D;/i_g%5B0:1:89%5D;/j%5B0:1:89%5D;/j_g%5B0:1:89%5D;/nb%5B0:1:3%5D;/nv%5B0:1:1%5D;/tile%5B2:8:10%5D;/time%5B0:1:0%5D

- id :

- 10.5067/ECL5M-SSH44

- institution :

- NASA Jet Propulsion Laboratory (JPL)

- instrument_vocabulary :

- GCMD instrument keywords

- keywords :

- EARTH SCIENCE > OCEANS > SEA SURFACE TOPOGRAPHY > SEA SURFACE HEIGHT, EARTH SCIENCE SERVICES > MODELS > EARTH SCIENCE REANALYSES/ASSIMILATION MODELS

- keywords_vocabulary :

- NASA Global Change Master Directory (GCMD) Science Keywords

- license :

- Public Domain

- metadata_link :

- https://cmr.earthdata.nasa.gov/search/collections.umm_json?ShortName=ECCO_L4_SSH_LLC0090GRID_MONTHLY_V4R4

- naming_authority :

- gov.nasa.jpl

- platform :

- ERS-1/2, TOPEX/Poseidon, Geosat Follow-On (GFO), ENVISAT, Jason-1, Jason-2, CryoSat-2, SARAL/AltiKa, Jason-3, AVHRR, Aquarius, SSM/I, SSMIS, GRACE, DTU17MDT, Argo, WOCE, GO-SHIP, MEOP, Ice Tethered Profilers (ITP)

- platform_vocabulary :

- GCMD platform keywords

- processing_level :

- L4

- product_name :

- SEA_SURFACE_HEIGHT_mon_mean_2000-01_ECCO_V4r4_native_llc0090.nc

- product_time_coverage_end :

- 2018-01-01T00:00:00

- product_time_coverage_start :

- 1992-01-01T12:00:00

- product_version :

- Version 4, Release 4

- program :

- NASA Physical Oceanography, Cryosphere, Modeling, Analysis, and Prediction (MAP)

- project :

- Estimating the Circulation and Climate of the Ocean (ECCO)

- publisher_email :

- podaac@podaac.jpl.nasa.gov

- publisher_institution :

- PO.DAAC

- publisher_name :

- Physical Oceanography Distributed Active Archive Center (PO.DAAC)

- publisher_type :

- institution

- publisher_url :

- https://podaac.jpl.nasa.gov

- references :

- ECCO Consortium, Fukumori, I., Wang, O., Fenty, I., Forget, G., Heimbach, P., & Ponte, R. M. 2020. Synopsis of the ECCO Central Production Global Ocean and Sea-Ice State Estimate (Version 4 Release 4). doi:10.5281/zenodo.3765928

- source :

- The ECCO V4r4 state estimate was produced by fitting a free-running solution of the MITgcm (checkpoint 66g) to satellite and in situ observational data in a least squares sense using the adjoint method

- standard_name_vocabulary :

- NetCDF Climate and Forecast (CF) Metadata Convention

- summary :

- This dataset provides monthly-averaged dynamic sea surface height and model sea level anomaly on the lat-lon-cap 90 (llc90) native model grid from the ECCO Version 4 Release 4 (V4r4) ocean and sea-ice state estimate. Estimating the Circulation and Climate of the Ocean (ECCO) state estimates are dynamically and kinematically-consistent reconstructions of the three-dimensional, time-evolving ocean, sea-ice, and surface atmospheric states. ECCO V4r4 is a free-running solution of a global, nominally 1-degree configuration of the MIT general circulation model (MITgcm) that has been fit to observations in a least-squares sense. Observational data constraints used in V4r4 include sea surface height (SSH) from satellite altimeters [ERS-1/2, TOPEX/Poseidon, GFO, ENVISAT, Jason-1,2,3, CryoSat-2, and SARAL/AltiKa]; sea surface temperature (SST) from satellite radiometers [AVHRR], sea surface salinity (SSS) from the Aquarius satellite radiometer/scatterometer, ocean bottom pressure (OBP) from the GRACE satellite gravimeter; sea-ice concentration from satellite radiometers [SSM/I and SSMIS], and in-situ ocean temperature and salinity measured with conductivity-temperature-depth (CTD) sensors and expendable bathythermographs (XBTs) from several programs [e.g., WOCE, GO-SHIP, Argo, and others] and platforms [e.g., research vessels, gliders, moorings, ice-tethered profilers, and instrumented pinnipeds]. V4r4 covers the period 1992-01-01T12:00:00 to 2018-01-01T00:00:00.

- time_coverage_duration :

- P1M

- time_coverage_end :

- 2000-02-01T00:00:00

- time_coverage_resolution :

- P1M

- time_coverage_start :

- 2000-01-01T00:00:00

- title :

- ECCO Sea Surface Height - Monthly Mean llc90 Grid (Version 4 Release 4)

- uuid :

- a7c2a1c4-400c-11eb-9f79-0cc47a3f49c3

- history_json :

- [{"$schema":"https:\/\/harmony.earthdata.nasa.gov\/schemas\/history\/0.1.0\/history-0.1.0.json","date_time":"2023-12-22T23:04:42.240+0000","program":"hyrax","version":"1.16.8-356","parameters":[{"request_url":"https:\/\/opendap.earthdata.nasa.gov\/providers\/POCLOUD\/collections\/ECCO%2520Sea%2520Surface%2520Height%2520-%2520Monthly%2520Mean%2520llc90%2520Grid%2520(Version%25204%2520Release%25204)\/granules\/SEA_SURFACE_HEIGHT_mon_mean_2000-01_ECCO_V4r4_native_llc0090.dap.nc4?dap4.ce=\/SSH%5B0:1:0%5D%5B2:8:10%5D%5B0:1:89%5D%5B0:1:89%5D;\/XG%5B2:8:10%5D%5B0:1:89%5D%5B0:1:89%5D;\/YC%5B2:8:10%5D%5B0:1:89%5D%5B0:1:89%5D;\/XC%5B2:8:10%5D%5B0:1:89%5D%5B0:1:89%5D;\/YG%5B2:8:10%5D%5B0:1:89%5D%5B0:1:89%5D;\/XC_bnds%5B2:8:10%5D%5B0:1:89%5D%5B0:1:89%5D%5B0:1:3%5D;\/YC_bnds%5B2:8:10%5D%5B0:1:89%5D%5B0:1:89%5D%5B0:1:3%5D;\/time_bnds%5B0:1:0%5D%5B0:1:1%5D;\/i%5B0:1:89%5D;\/i_g%5B0:1:89%5D;\/j%5B0:1:89%5D;\/j_g%5B0:1:89%5D;\/nb%5B0:1:3%5D;\/nv%5B0:1:1%5D;\/tile%5B2:8:10%5D;\/time%5B0:1:0%5D"},{"decoded_constraint":"dap4.ce=\/SSH[0:1:0][2:8:10][0:1:89][0:1:89];\/XG[2:8:10][0:1:89][0:1:89];\/YC[2:8:10][0:1:89][0:1:89];\/XC[2:8:10][0:1:89][0:1:89];\/YG[2:8:10][0:1:89][0:1:89];\/XC_bnds[2:8:10][0:1:89][0:1:89][0:1:3];\/YC_bnds[2:8:10][0:1:89][0:1:89][0:1:3];\/time_bnds[0:1:0][0:1:1];\/i[0:1:89];\/i_g[0:1:89];\/j[0:1:89];\/j_g[0:1:89];\/nb[0:1:3];\/nv[0:1:1];\/tile[2:8:10];\/time[0:1:0]"}]}]

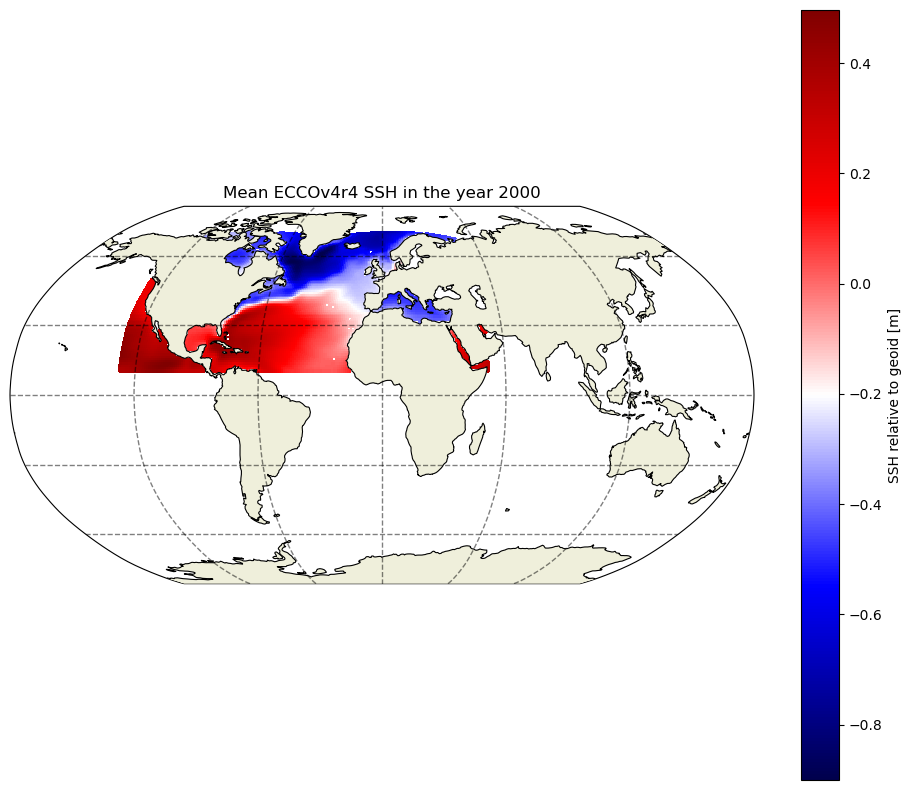

Looks similar to the previous non-subsetted dataset, but now there are only 2 tiles and one data variable. Let’s map the mean SSH (relative to the geoid) for the year 2000 in the two tiles. For the quickest view, we can use the plot_proj_to_latlon_grid function that is part of the ecco_v4_py package.

import ecco_v4_py as ecco

import matplotlib.pyplot as plt

plt.figure(num=0,figsize=(12,10))

ecco.plot_proj_to_latlon_grid(ds_SSH_mon_2000_sub.XC.values,\

ds_SSH_mon_2000_sub.YC.values,\

np.mean(ds_SSH_mon_2000_sub.SSH.values,axis=0),\

cmap='seismic',\

show_colorbar=True,colorbar_label='SSH relative to geoid [m]')

plt.title('Mean ECCOv4r4 SSH in the year 2000')

plt.show()

-179.875 179.875

-180.0 180.0

-89.875 89.875

-90.0 90.0

C:\cygwin64\home\adelman\Anaconda3\lib\site-packages\matplotlib\colors.py:621: RuntimeWarning: overflow encountered in multiply

xa *= self.N

C:\cygwin64\home\adelman\Anaconda3\lib\site-packages\matplotlib\colors.py:621: RuntimeWarning: overflow encountered in multiply

xa *= self.N

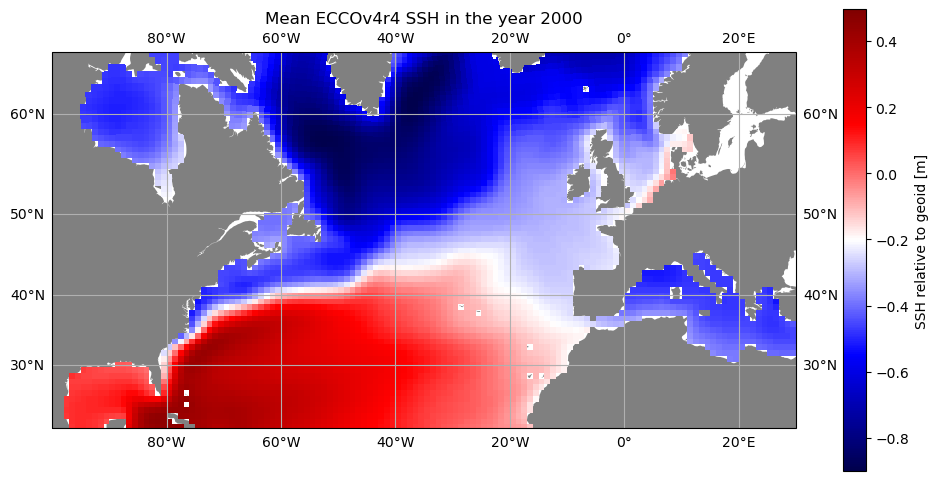

But perhaps we don’t want to plot the global domain when we only have data for part of the Northern Hemisphere. The resample_to_latlon function from the ecco_v4_py package allows us to reinterpolate the native grid output to a lat/lon grid of our choosing. Then we can use the versatile cartopy package to produce the map. This is the package that also produces the maps in the ecco.plot_proj_to_latlon_grid function, but by importing cartopy directly we can customize the map more.

help(ecco.resample_to_latlon)

Help on function resample_to_latlon in module ecco_v4_py.resample_to_latlon:

resample_to_latlon(orig_lons, orig_lats, orig_field, new_grid_min_lat, new_grid_max_lat, new_grid_delta_lat, new_grid_min_lon, new_grid_max_lon, new_grid_delta_lon, radius_of_influence=120000, fill_value=None, mapping_method='bin_average')

Take a field from a source grid and interpolate to a target grid.

Parameters

----------

orig_lons, orig_lats, orig_field : xarray DataArray or numpy array :

the lons, lats, and field from the source grid

new_grid_min_lat, new_grid_max_lat : float

latitude limits of new lat-lon grid

new_grid_delta_lat : float

latitudinal extent of new lat-lon grid cells in degrees (-90..90)

new_grid_min_lon, new_grid_max_lon : float

longitude limits of new lat-lon grid (-180..180)

new_grid_delta_lon : float

longitudinal extent of new lat-lon grid cells in degrees

radius_of_influence : float, optional. Default 120000 m

the radius of the circle within which the input data is search for

when mapping to the new grid

fill_value : float, optional. Default None

value to use in the new lat-lon grid if there are no valid values

from the source grid

mapping_method : string, optional. Default 'bin_average'

denote the type of interpolation method to use.

options include

'nearest_neighbor' - Take the nearest value from the source grid

to the target grid

'bin_average' - Use the average value from the source grid

to the target grid

RETURNS:

new_grid_lon_centers, new_grid_lat_centers : ndarrays

2D arrays with the lon and lat values of the new grid cell centers

new_grid_lon_edges, new_grid_lat_edges: ndarrays

2D arrays with the lon and lat values of the new grid cell edges

data_latlon_projection:

the source field interpolated to the new grid

import cartopy

import cartopy.crs as ccrs

# resample 2000 mean SSH to lat/lon grid

lon_centers,lat_centers,\

lon_edges,lat_edges,\

SSH_mean_resampled = ecco.resample_to_latlon(ds_SSH_mon_2000_sub.XC.values,\

ds_SSH_mon_2000_sub.YC.values,\

np.mean(ds_SSH_mon_2000_sub.SSH.values,axis=0),\

new_grid_min_lat=10,\

new_grid_max_lat=70,\

new_grid_delta_lat=0.1,\

new_grid_min_lon=-130,\

new_grid_max_lon=50,\

new_grid_delta_lon=0.1,\

mapping_method='nearest_neighbor')

# plot with orthographic projection: view from directly overhead 40 W, 40 N

fig,ax = plt.subplots(1,1,figsize=(12,10),\

subplot_kw={'projection':ccrs.Mercator(latitude_true_scale=40)})

curr_plot = ax.pcolormesh(lon_centers,lat_centers,\

SSH_mean_resampled,\

transform=ccrs.PlateCarree(),cmap='seismic')

ax.set_extent([-100,30,20,65],ccrs.PlateCarree())

ax.add_feature(cartopy.feature.LAND,facecolor='gray') # add shaded land areas

ax.gridlines(draw_labels=True)

plt.colorbar(curr_plot,shrink=0.6,label='SSH relative to geoid [m]')

plt.title('Mean ECCOv4r4 SSH in the year 2000')

plt.show()

-129.95 49.95

-130.0 50.0

10.05 69.95

10.0 70.0

C:\cygwin64\home\adelman\Anaconda3\lib\site-packages\matplotlib\colors.py:621: RuntimeWarning: overflow encountered in multiply

xa *= self.N

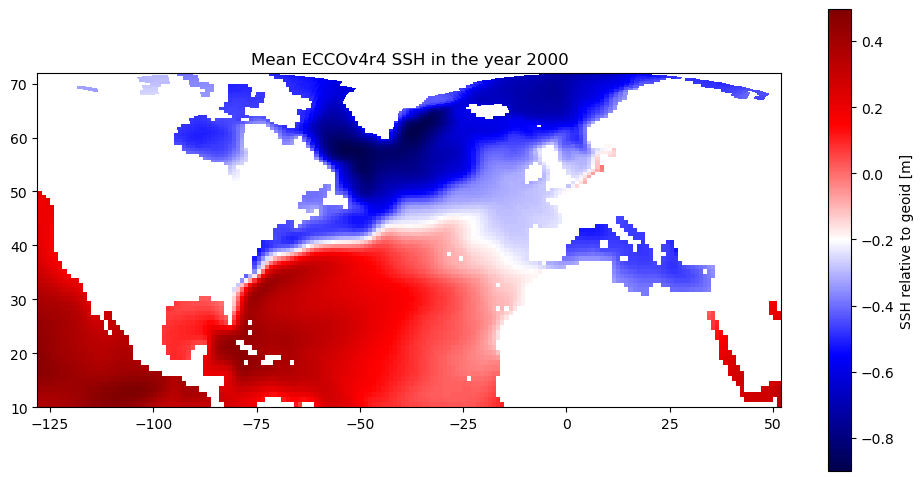

Alternatively, we can map the SSH without resampling (and maintain the integrity of the original grid) by concatenating the adjacent tiles along their shared edge to create a 180x90 map.

However…since tiles 7-12 are “rotated” tiles, we need to “unrotate” tile 10 before concatenating it with tile 2.

For more information about tiles on the native grid, see this tutorial.

import matplotlib.pyplot as plt

def unrotate_concat(array_2tiles):

unrotated_tile = np.rot90(array_2tiles[-1,:,:],k=1)

concat_array = np.concatenate((unrotated_tile,array_2tiles[0,:,:]),axis=-1)

return concat_array

plt.figure(figsize=(12,10))

curr_plot = plt.pcolormesh(unrotate_concat(ds_SSH_mon_2000_sub.XC.values),\

unrotate_concat(ds_SSH_mon_2000_sub.YC.values),\

unrotate_concat(np.mean(ds_SSH_mon_2000_sub.SSH.values,axis=0)),\

cmap='seismic')

plt.gca().set_aspect(1/np.cos((np.pi/180)*40))

plt.colorbar(curr_plot,shrink=0.6,label='SSH relative to geoid [m]')

plt.title('Mean ECCOv4r4 SSH in the year 2000')

plt.show()

C:\Users\adelman\AppData\Local\Temp\ipykernel_11700\3523494463.py:10: UserWarning: The input coordinates to pcolormesh are interpreted as cell centers, but are not monotonically increasing or decreasing. This may lead to incorrectly calculated cell edges, in which case, please supply explicit cell edges to pcolormesh.

curr_plot = plt.pcolormesh(unrotate_concat(ds_SSH_mon_2000_sub.XC.values),\

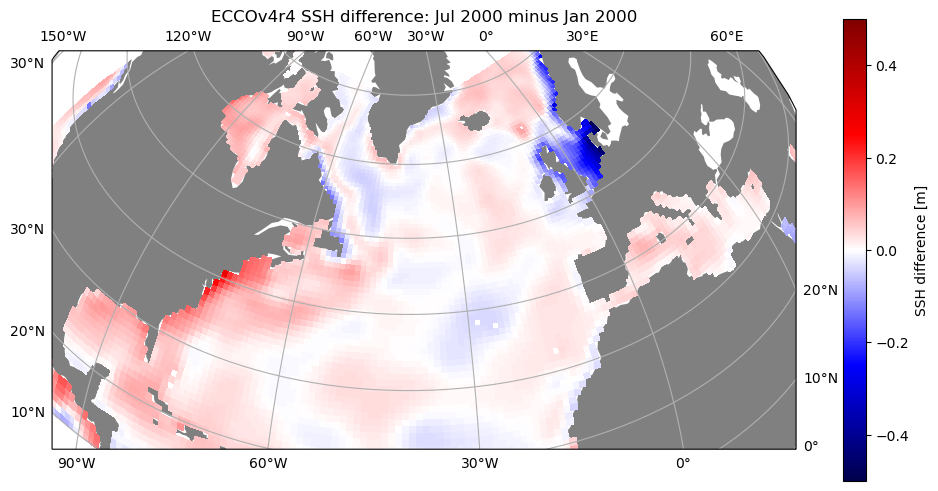

Now let’s use cartopy again to produce a map projection…and this time let’s plot the difference of SSH anomaly in July vs. January 2000.

import cartopy

import cartopy.crs as ccrs

# plot with orthographic projection: view from directly overhead 40 W, 40 N

fig,ax = plt.subplots(1,1,figsize=(12,10),\

subplot_kw={'projection':ccrs.Orthographic(central_longitude=-40,central_latitude=40)})

curr_plot = ax.pcolormesh(unrotate_concat(ds_SSH_mon_2000_sub.XC.values),\

unrotate_concat(ds_SSH_mon_2000_sub.YC.values),\

unrotate_concat(np.diff(ds_SSH_mon_2000_sub.SSH.values[[0,6],:,:,:],axis=0).squeeze()),\

transform=ccrs.PlateCarree(),cmap='seismic',vmin=-.5,vmax=.5)

ax.set_extent([-100,30,20,65],ccrs.PlateCarree())

ax.add_feature(cartopy.feature.LAND,facecolor='gray') # add shaded land areas

ax.gridlines(draw_labels=True)

plt.colorbar(curr_plot,shrink=0.6,label='SSH difference [m]')

plt.title('ECCOv4r4 SSH difference: Jul 2000 minus Jan 2000')

plt.show()

We can see that there is a seasonal increase in SSH from winter to summer in most locations (likely driven by density changes/steric height), except there is a decrease in the marginal seas of northern Europe.

Example 2: Hurricane season SST in the Gulf of Mexico#

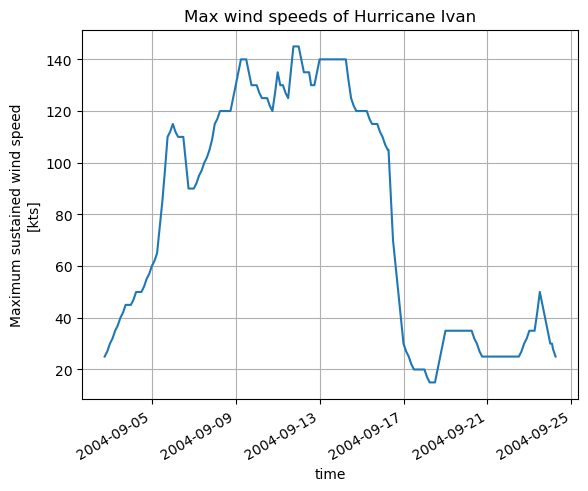

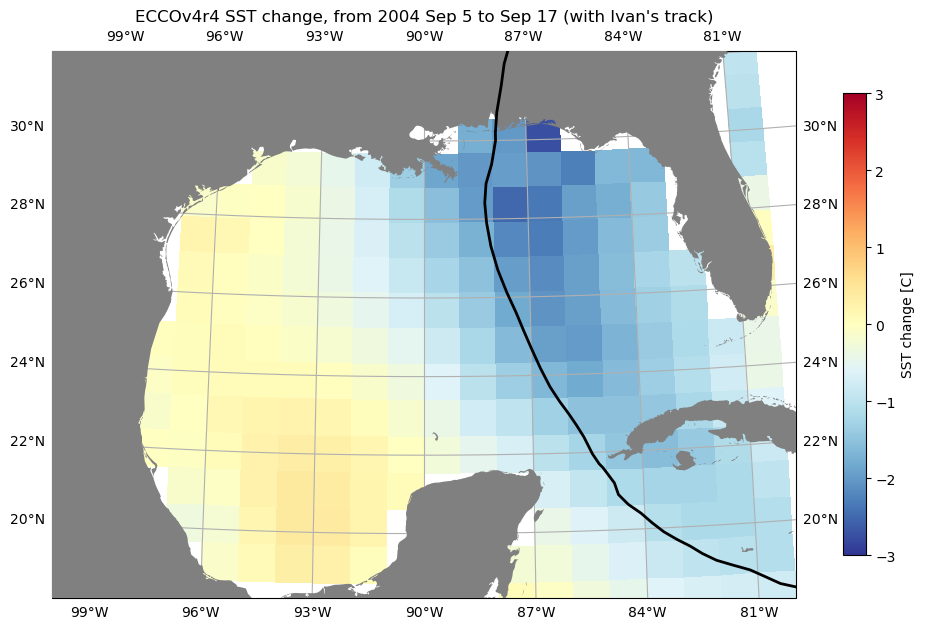

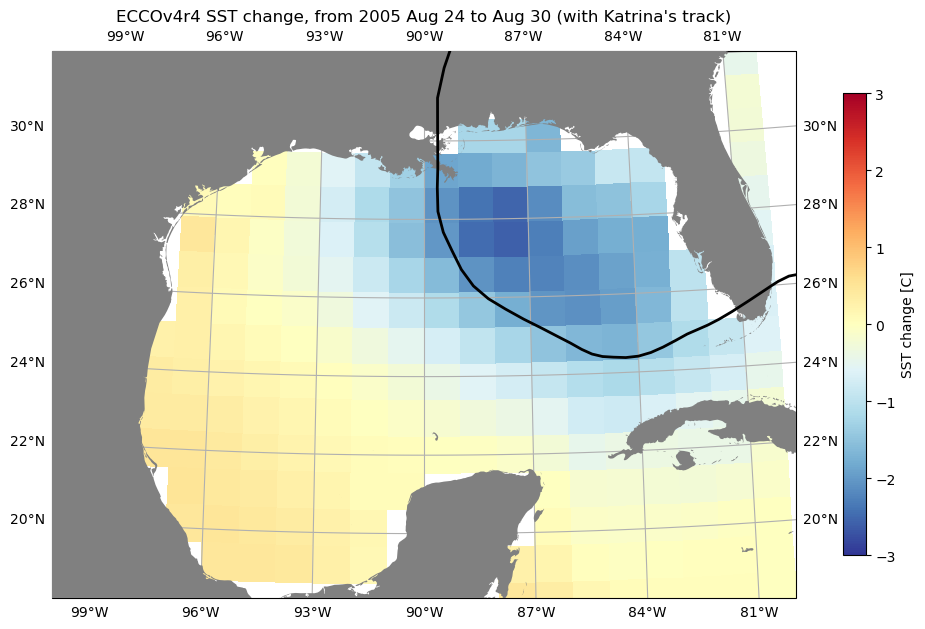

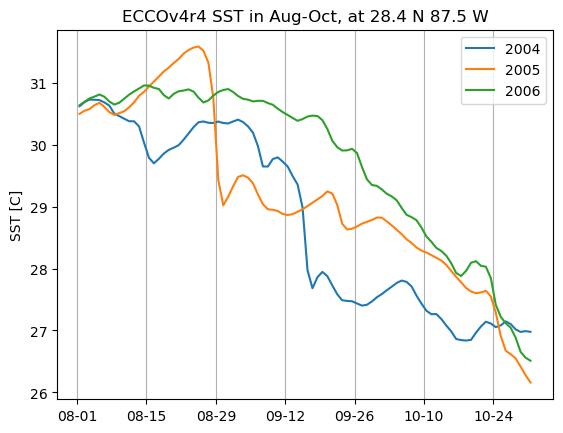

For another example, let’s download sea surface temperature (SST) in the Gulf of Mexico, in order to look at the relationship between hurricanes and SST. There is no “SST” dataset available for ECCOv4r4; what we want is the THETA variable in the top layer (upper 10 m). We will use the subset download function to retrieve the top layer THETA at daily resolution in Aug-Oct 2004-2006 (2004 and 2005 were both very active hurricane seasons in the region).

We can find the ShortName of the dataset that contains THETA here. As with the previous example, we can also identify the indices we need to subset on the native grid (in this case the region is contained in a single tile, so we can also subset by j and i.

import numpy as np

import xarray as xr

from os.path import join,expanduser

import matplotlib.pyplot as plt

# find llc90 tiles and indices in given bounding box

def llc90_tiles_indices_find(ds_grid,latsouth,latnorth,longwest,longeast):

lat_llc90 = ds_grid.YC.values

lon_llc90 = ds_grid.XC.values

cells_in_box = np.logical_and(np.logical_and(lat_llc90 >= latsouth,lat_llc90 <= latnorth),\

((lon_llc90 - longwest - 1.e-5) % 360) <= (longeast - longwest - 1.e-5) % 360)

cells_in_box_tile_ind,cells_in_box_j_ind,cells_in_box_i_ind = cells_in_box.nonzero()

tiles_in_box = np.unique(cells_in_box_tile_ind)

j_in_box = np.unique(cells_in_box_j_ind)

i_in_box = np.unique(cells_in_box_i_ind)

return tiles_in_box,j_in_box,i_in_box

# find tiles in North Atlantic

longwest = -100

longeast = -80

latsouth = 18

latnorth = 32

tiles_GoM,j_GoM,i_GoM = llc90_tiles_indices_find(ds_grid,latsouth,latnorth,longwest,longeast)

print('Gulf of Mexico tiles = '+str(tiles_GoM)+', j = '+str(j_GoM)+', i = '+str(i_GoM))

Gulf of Mexico tiles = [10], j = [28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47], i = [66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81]

ea.ecco_podaac_download_subset(ShortName='ECCO_L4_TEMP_SALINITY_LLC0090GRID_DAILY_V4R4',\

vars_to_include=['THETA'],\

times_to_include=['2004-08','2004-09','2004-10',\

'2005-08','2005-09','2005-10',\

'2006-08','2006-09','2006-10'],\

k_isel=[0,1,1],\

tile_isel=[10,11,1],\

j_isel=[28,48,1],\

i_isel=[66,82,1],\

subset_file_id='SST_GoM')

Download to directory C:\Users\adelman\Downloads\ECCO_V4r4_PODAAC\ECCO_L4_TEMP_SALINITY_LLC0090GRID_DAILY_V4R4

Please wait while program searches for the granules ...

Total number of matching granules: 276

DL Progress: 100%|#######################| 276/276 [21:12<00:00, 4.61s/it]

=====================================

total downloaded: 42.08 Mb

avg download speed: 0.03 Mb/s

Time spent = 1272.8332271575928 seconds

ds_SST_GoM = xr.open_mfdataset(join(expanduser('~'),'Downloads','ECCO_V4r4_PODAAC',\

'ECCO_L4_TEMP_SALINITY_LLC0090GRID_DAILY_V4R4',\

'*SST_GoM.nc'),\

compat='override',data_vars='minimal',coords='minimal')

# the last three options are recommended for merging a large number of individual files

ds_SST_GoM = ds_SST_GoM.compute() # .compute() loads the dataset into workspace memory

ds_SST_GoM

<xarray.Dataset>

Dimensions: (tile: 1, j_g: 20, i_g: 16, k_p1: 2, k_l: 1, j: 20, i: 16, nb: 4, k_u: 1, time: 276, k: 1, nv: 2)

Coordinates: (12/24)

XG (tile, j_g, i_g) float32 -100.0 -100.0 -100.0 ... -81.0 -81.0

Zp1 (k_p1) float32 0.0 -10.0

Zl (k_l) float32 0.0

YC (tile, j, i) float32 31.83 30.98 30.11 ... 20.12 19.18 18.23

XC (tile, j, i) float32 -99.5 -99.5 -99.5 ... -80.5 -80.5 -80.5

YG (tile, j_g, i_g) float32 32.26 31.41 30.55 ... 20.6 19.65 18.7

... ...

* k_p1 (k_p1) int32 0 1

* k_u (k_u) int32 0

* nb (nb) float32 0.0 1.0 2.0 3.0

* nv (nv) float32 0.0 1.0

* tile (tile) int32 10

* time (time) datetime64[ns] 2004-08-01T12:00:00 ... 2006-10-31T12:00:00

Data variables:

THETA (time, k, tile, j, i) float32 nan nan nan ... 29.62 29.82 29.94

Attributes: (12/63)

acknowledgement: This research was carried out by the Jet...

author: Ian Fenty and Ou Wang

cdm_data_type: Grid

comment: Fields provided on the curvilinear lat-l...

Conventions: CF-1.8, ACDD-1.3

coordinates_comment: Note: the global 'coordinates' attribute...

... ...

time_coverage_end: 2004-08-02T00:00:00

time_coverage_resolution: P1D

time_coverage_start: 2004-08-01T00:00:00

title: ECCO Ocean Temperature and Salinity - Da...

uuid: c709dbee-4168-11eb-af01-0cc47a3f4aa1

history_json: [{"$schema":"https:\/\/harmony.earthdata...- tile: 1

- j_g: 20

- i_g: 16

- k_p1: 2

- k_l: 1

- j: 20

- i: 16

- nb: 4

- k_u: 1

- time: 276

- k: 1

- nv: 2

- XG(tile, j_g, i_g)float32-100.0 -100.0 ... -81.0 -81.0

- long_name :

- longitude of 'southwest' corner of tracer grid cell

- units :

- degrees_east

- coordinate :

- YG XG

- comment :

- Nonuniform grid spacing. Note: 'southwest' does not correspond to geographic orientation but is used for convenience to describe the computational grid. See MITgcm dcoumentation for details.

- coverage_content_type :

- coordinate

- standard_name :

- longitude

- origname :

- XG

- fullnamepath :

- /XG

array([[[-100., -100., -100., -100., -100., -100., -100., -100., -100., -100., -100., -100., -100., -100., -100., -100.], [ -99., -99., -99., -99., -99., -99., -99., -99., -99., -99., -99., -99., -99., -99., -99., -99.], [ -98., -98., -98., -98., -98., -98., -98., -98., -98., -98., -98., -98., -98., -98., -98., -98.], [ -97., -97., -97., -97., -97., -97., -97., -97., -97., -97., -97., -97., -97., -97., -97., -97.], [ -96., -96., -96., -96., -96., -96., -96., -96., -96., -96., -96., -96., -96., -96., -96., -96.], [ -95., -95., -95., -95., -95., -95., -95., -95., -95., -95., -95., -95., -95., -95., -95., -95.], [ -94., -94., -94., -94., -94., -94., -94., -94., -94., -94., -94., -94., -94., -94., -94., -94.], [ -93., -93., -93., -93., -93., -93., -93., -93., -93., -93., -93., -93., -93., -93., -93., -93.], [ -92., -92., -92., -92., -92., -92., -92., -92., -92., -92., -92., -92., -92., -92., -92., -92.], [ -91., -91., -91., -91., -91., -91., -91., -91., -91., -91., -91., -91., -91., -91., -91., -91.], [ -90., -90., -90., -90., -90., -90., -90., -90., -90., -90., -90., -90., -90., -90., -90., -90.], [ -89., -89., -89., -89., -89., -89., -89., -89., -89., -89., -89., -89., -89., -89., -89., -89.], [ -88., -88., -88., -88., -88., -88., -88., -88., -88., -88., -88., -88., -88., -88., -88., -88.], [ -87., -87., -87., -87., -87., -87., -87., -87., -87., -87., -87., -87., -87., -87., -87., -87.], [ -86., -86., -86., -86., -86., -86., -86., -86., -86., -86., -86., -86., -86., -86., -86., -86.], [ -85., -85., -85., -85., -85., -85., -85., -85., -85., -85., -85., -85., -85., -85., -85., -85.], [ -84., -84., -84., -84., -84., -84., -84., -84., -84., -84., -84., -84., -84., -84., -84., -84.], [ -83., -83., -83., -83., -83., -83., -83., -83., -83., -83., -83., -83., -83., -83., -83., -83.], [ -82., -82., -82., -82., -82., -82., -82., -82., -82., -82., -82., -82., -82., -82., -82., -82.], [ -81., -81., -81., -81., -81., -81., -81., -81., -81., -81., -81., -81., -81., -81., -81., -81.]]], dtype=float32) - Zp1(k_p1)float320.0 -10.0

- long_name :

- depth of tracer grid cell interface

- units :

- m

- positive :

- up

- comment :

- Contains one element more than the number of vertical layers. First element is 0m, the depth of the upper interface of the surface grid cell. Last element is the depth of the lower interface of the deepest grid cell.

- coverage_content_type :

- coordinate

- standard_name :

- depth

- origname :

- Zp1

- fullnamepath :

- /Zp1

array([ 0., -10.], dtype=float32)

- Zl(k_l)float320.0

- units :

- m

- positive :

- up

- coverage_content_type :

- coordinate

- standard_name :

- depth

- long_name :

- depth of the top face of tracer grid cells

- comment :

- First element is 0m, the depth of the top face of the first tracer grid cell (ocean surface). Last element is the depth of the top face of the deepest grid cell. The use of 'l' in the variable name follows the MITgcm convention for ocean variables in which the lower (l) face of a tracer grid cell on the logical grid corresponds to the top face of the grid cell on the physical grid. In other words, the logical vertical grid of MITgcm ocean variables is inverted relative to the physical vertical grid.

- origname :

- Zl

- fullnamepath :

- /Zl

array([0.], dtype=float32)

- YC(tile, j, i)float3231.83 30.98 30.11 ... 19.18 18.23

- long_name :

- latitude of tracer grid cell center

- units :

- degrees_north

- coordinate :

- YC XC

- bounds :

- YC_bnds

- comment :

- nonuniform grid spacing

- coverage_content_type :

- coordinate

- standard_name :

- latitude

- origname :

- YC

- fullnamepath :

- /YC

array([[[31.833044, 30.976704, 30.112358, 29.240158, 28.360258, 27.472822, 26.578028, 25.676054, 24.767094, 23.851347, 22.929024, 22.00034 , 21.065521, 20.1248 , 19.178421, 18.226631], [31.833044, 30.976704, 30.112358, 29.240158, 28.360258, 27.472822, 26.578028, 25.676054, 24.767094, 23.851347, 22.929024, 22.00034 , 21.065521, 20.1248 , 19.178421, 18.226631], [31.833044, 30.976704, 30.112358, 29.240158, 28.360258, 27.472822, 26.578028, 25.676054, 24.767094, 23.851347, 22.929024, 22.00034 , 21.065521, 20.1248 , 19.178421, 18.226631], [31.833044, 30.976704, 30.112358, 29.240158, 28.360258, 27.472822, 26.578028, 25.676054, 24.767094, 23.851347, 22.929024, 22.00034 , 21.065521, 20.1248 , 19.178421, 18.226631], [31.833044, 30.976704, 30.112358, 29.240158, 28.360258, 27.472822, 26.578028, 25.676054, 24.767094, 23.851347, 22.929024, 22.00034 , 21.065521, 20.1248 , 19.178421, 18.226631], ... [31.833044, 30.976704, 30.112358, 29.240158, 28.360258, 27.472822, 26.578028, 25.676054, 24.767094, 23.851347, 22.929024, 22.00034 , 21.065521, 20.1248 , 19.178421, 18.226631], [31.833044, 30.976704, 30.112358, 29.240158, 28.360258, 27.472822, 26.578028, 25.676054, 24.767094, 23.851347, 22.929024, 22.00034 , 21.065521, 20.1248 , 19.178421, 18.226631], [31.833044, 30.976704, 30.112358, 29.240158, 28.360258, 27.472822, 26.578028, 25.676054, 24.767094, 23.851347, 22.929024, 22.00034 , 21.065521, 20.1248 , 19.178421, 18.226631], [31.833044, 30.976704, 30.112358, 29.240158, 28.360258, 27.472822, 26.578028, 25.676054, 24.767094, 23.851347, 22.929024, 22.00034 , 21.065521, 20.1248 , 19.178421, 18.226631], [31.833044, 30.976704, 30.112358, 29.240158, 28.360258, 27.472822, 26.578028, 25.676054, 24.767094, 23.851347, 22.929024, 22.00034 , 21.065521, 20.1248 , 19.178421, 18.226631]]], dtype=float32) - XC(tile, j, i)float32-99.5 -99.5 -99.5 ... -80.5 -80.5

- long_name :

- longitude of tracer grid cell center

- units :

- degrees_east

- coordinate :

- YC XC

- bounds :

- XC_bnds

- comment :

- nonuniform grid spacing

- coverage_content_type :

- coordinate

- standard_name :

- longitude

- origname :

- XC

- fullnamepath :

- /XC

array([[[-99.5, -99.5, -99.5, -99.5, -99.5, -99.5, -99.5, -99.5, -99.5, -99.5, -99.5, -99.5, -99.5, -99.5, -99.5, -99.5], [-98.5, -98.5, -98.5, -98.5, -98.5, -98.5, -98.5, -98.5, -98.5, -98.5, -98.5, -98.5, -98.5, -98.5, -98.5, -98.5], [-97.5, -97.5, -97.5, -97.5, -97.5, -97.5, -97.5, -97.5, -97.5, -97.5, -97.5, -97.5, -97.5, -97.5, -97.5, -97.5], [-96.5, -96.5, -96.5, -96.5, -96.5, -96.5, -96.5, -96.5, -96.5, -96.5, -96.5, -96.5, -96.5, -96.5, -96.5, -96.5], [-95.5, -95.5, -95.5, -95.5, -95.5, -95.5, -95.5, -95.5, -95.5, -95.5, -95.5, -95.5, -95.5, -95.5, -95.5, -95.5], [-94.5, -94.5, -94.5, -94.5, -94.5, -94.5, -94.5, -94.5, -94.5, -94.5, -94.5, -94.5, -94.5, -94.5, -94.5, -94.5], [-93.5, -93.5, -93.5, -93.5, -93.5, -93.5, -93.5, -93.5, -93.5, -93.5, -93.5, -93.5, -93.5, -93.5, -93.5, -93.5], [-92.5, -92.5, -92.5, -92.5, -92.5, -92.5, -92.5, -92.5, -92.5, -92.5, -92.5, -92.5, -92.5, -92.5, -92.5, -92.5], [-91.5, -91.5, -91.5, -91.5, -91.5, -91.5, -91.5, -91.5, -91.5, -91.5, -91.5, -91.5, -91.5, -91.5, -91.5, -91.5], [-90.5, -90.5, -90.5, -90.5, -90.5, -90.5, -90.5, -90.5, -90.5, -90.5, -90.5, -90.5, -90.5, -90.5, -90.5, -90.5], [-89.5, -89.5, -89.5, -89.5, -89.5, -89.5, -89.5, -89.5, -89.5, -89.5, -89.5, -89.5, -89.5, -89.5, -89.5, -89.5], [-88.5, -88.5, -88.5, -88.5, -88.5, -88.5, -88.5, -88.5, -88.5, -88.5, -88.5, -88.5, -88.5, -88.5, -88.5, -88.5], [-87.5, -87.5, -87.5, -87.5, -87.5, -87.5, -87.5, -87.5, -87.5, -87.5, -87.5, -87.5, -87.5, -87.5, -87.5, -87.5], [-86.5, -86.5, -86.5, -86.5, -86.5, -86.5, -86.5, -86.5, -86.5, -86.5, -86.5, -86.5, -86.5, -86.5, -86.5, -86.5], [-85.5, -85.5, -85.5, -85.5, -85.5, -85.5, -85.5, -85.5, -85.5, -85.5, -85.5, -85.5, -85.5, -85.5, -85.5, -85.5], [-84.5, -84.5, -84.5, -84.5, -84.5, -84.5, -84.5, -84.5, -84.5, -84.5, -84.5, -84.5, -84.5, -84.5, -84.5, -84.5], [-83.5, -83.5, -83.5, -83.5, -83.5, -83.5, -83.5, -83.5, -83.5, -83.5, -83.5, -83.5, -83.5, -83.5, -83.5, -83.5], [-82.5, -82.5, -82.5, -82.5, -82.5, -82.5, -82.5, -82.5, -82.5, -82.5, -82.5, -82.5, -82.5, -82.5, -82.5, -82.5], [-81.5, -81.5, -81.5, -81.5, -81.5, -81.5, -81.5, -81.5, -81.5, -81.5, -81.5, -81.5, -81.5, -81.5, -81.5, -81.5], [-80.5, -80.5, -80.5, -80.5, -80.5, -80.5, -80.5, -80.5, -80.5, -80.5, -80.5, -80.5, -80.5, -80.5, -80.5, -80.5]]], dtype=float32) - YG(tile, j_g, i_g)float3232.26 31.41 30.55 ... 19.65 18.7

- long_name :

- latitude of 'southwest' corner of tracer grid cell